AI Blogs - Page 16

Distributed fine-tuning of MPT-30B using Composer on AMD GPUs

This blog uses Composer, a distributed training framework, to fine-tune MPT-30B on AMD GPUs in both single-node and multi-node setups.

Vision Mamba on AMD GPU with ROCm

This blog explores Vision Mamba (Vim), an innovative and efficient backbone for vision tasks, and evaluates its performance on AMD GPUs with ROCm.

Getting started with AMD ROCm containers: from base images to custom solutions

This post, the second in a series, provides a walkthrough for building a vLLM container that can be used for both inference and benchmarking.

Triton Inference Server with vLLM on AMD GPUs

This blog provides a how-to guide for setting up a Triton Inference Server with a vLLM backend on AMD GPUs, showcasing robust performance with several LLMs.

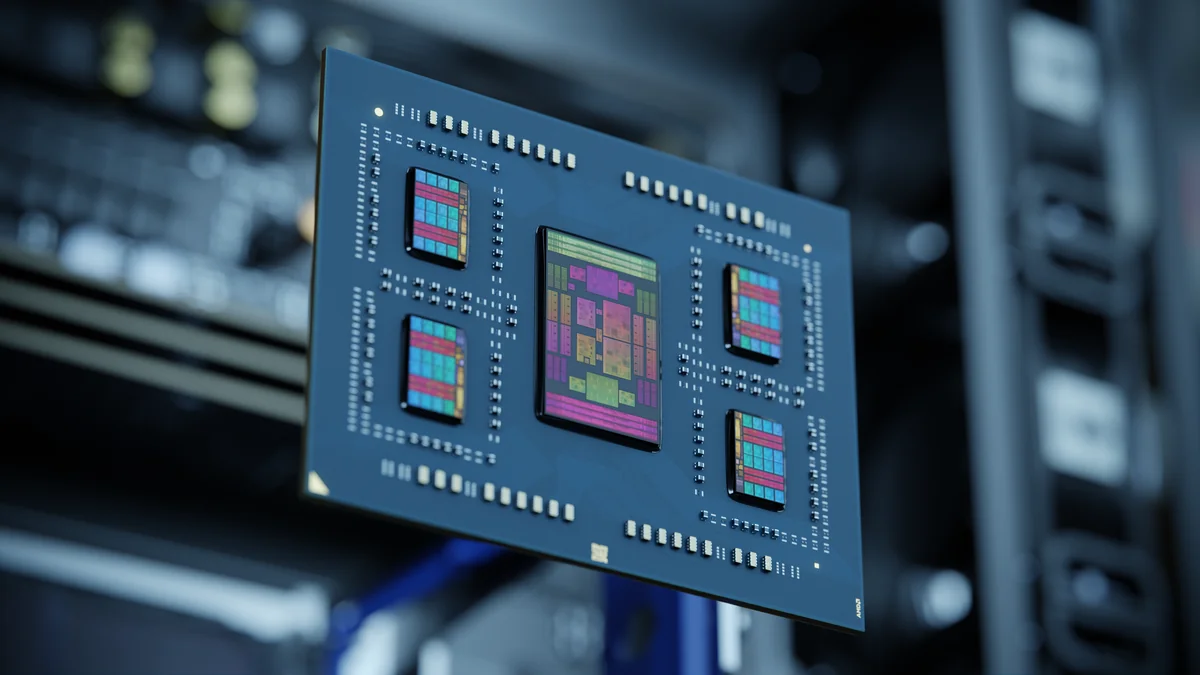

Training Transformers and Hybrid models on AMD Instinct MI300X Accelerators

This blog shows Zyphra's new training kernels for transformers and hybrid models on AMD Instinct MI300X accelerators, surpassing the H100's performance.

Transformer based Encoder-Decoder models for image-captioning on AMD GPUs

This blog introduces image captioning and provides hands-on tutorials on three Transformer-based encoder-decoder image captioning models: ViT-GPT2, BLIP, and Alpha-CLIP, deployed on AMD GPUs using ROCm.

SGLang: Fast Serving Framework for Large Language and Vision-Language Models on AMD Instinct GPUs

Discover SGLang, a fast serving framework designed for large language and vision-language models on AMD GPUs, supporting efficient runtime and a flexible programming interface.

Quantized 8-bit LLM training and inference using bitsandbytes on AMD GPUs

Learn how to use bitsandbytes' 8-bit techniques, the 8-bit optimizer and LLM.int8, to optimize LLM training and inference using ROCm on AMD GPUs.

Distributed Data Parallel Training on AMD GPU with ROCm

This blog demonstrates how to speed up the training of a ResNet model on the CIFAR-100 classification task using PyTorch DDP on AMD GPUs with ROCm.

Torchtune on AMD GPUs How-To Guide: Fine-tuning and Scaling LLMs with Multi-GPU Power

Torchtune is a PyTorch library that enables efficient fine-tuning of LLMs. In this blog we use Torchtune to fine-tune the Llama-3.1-8B model for summarization tasks using LoRA and showcase scalable training across multiple GPUs.

CTranslate2: Efficient Inference with Transformer Models on AMD GPUs

This blog covers optimizing Transformer models with CTranslate2 for efficient inference on AMD GPUs.

Inference with Llama 3.2 Vision LLMs on AMD GPUs Using ROCm

Meta's Llama 3.2 Vision models bring multimodal capabilities for vision-text tasks. This blog explores leveraging them on AMD GPUs with ROCm for efficient AI workflows.