AI Blogs

Primus-Pipeline: A More Flexible and Scalable Pipeline Parallelism Implementation

Learn how to use our flexible and scalable pipeline parallelism framework with the Primus backend and AMD hardware.

FlyDSL: Expert GPU Kernel Development with the Ease of MLIR Python Native DSL on AMD GPUs

FlyDSL is a Python-first, MLIR-native DSL for expert GPU kernel development and tuning on AMD GPUs.

Unlocking Sparse Acceleration on AMD GPUs with hipSPARSELt

This blog post introduces the semi-structured sparsity supported on AMD systems and explains how to use the corresponding library, hipSPARSELt, to benefit from it.

Advanced MXFP4 Quantization: Combining Fine-Tuned Rotations with SmoothQuant for Near-Lossless Compression

Showcases advanced algorithms available in AMD Quark for efficient MXFP4 quantization on AMD Instinct accelerators with high accuracy retention.

Elevate Your LLM Inference: Autoscaling with Ray, ROCm 7.0.0, and SkyPilot

Learn how to use multi-node and multi-cluster autoscaling in the Ray framework on ROCm 7.0.0 with SkyPilot.

Building Robotics Applications with Ryzen AI and ROS 2

This blog post walks through deploying a robotics application on an AI PC integrated with ROS, the Robot Operating System. We use the Ryzen AI CVML Library for perception tasks such as depth estimation, and develop a custom ROS 2 node that allows easy integration with the ROS ecosystem and standard components.

Quickly Developing Powerful Flash Attention Using TileLang on AMD Instinct MI300X GPU

Learn how to use TileLang to develop your own kernels and fully utilize AMD GPUs.

Accelerating llama.cpp on AMD Instinct MI300X

Learn more about the superior performance of llama.cpp on Instinct platforms.

Solution Blueprints: Accelerating AI Deployment with AMD Enterprise AI

This blog presents AIMs Solution Blueprints and demonstrates modular, Helm‑based deployment patterns.

Digital Twins on AMD: Building Robotic Simulations Using Edge AI PCs

Explore how Ryzen AI MAX enables robotic simulation on a single AI PC and take your first step into digital twins.

Resilient Large-Scale Training: Integrating TorchFT with TorchTitan on AMD GPUs

Achieve resilient, checkpoint-less distributed training on AMD GPUs by integrating TorchFT with TorchTitan on Primus-SaFE.

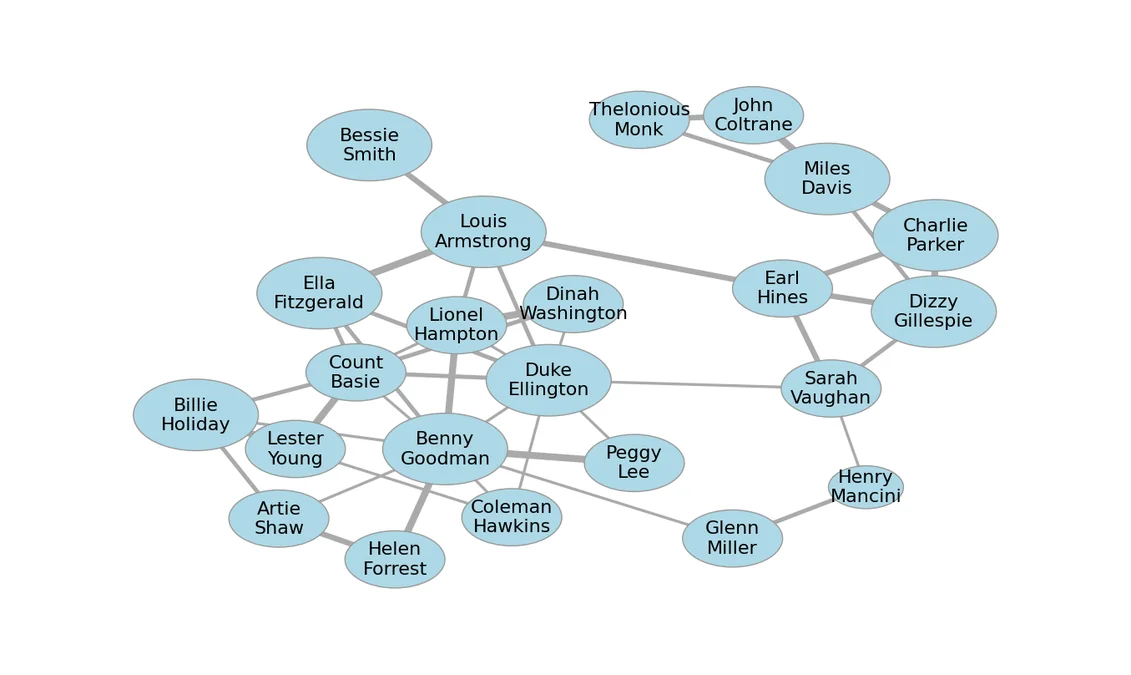

Accelerating Graph Layout with AI and ROCm on AMD GPUs

A case study of using AI coding agents to optimize graph layout on GPUs.

Adaptive Top-K Selection: Eliminating Performance Cliffs Across All K Values on AMD GPUs

Explore adaptive Top-K on MI300X! See how auto-selection and hardware optimizations like DPP and double buffering drive peak efficiency.

LLM Inference Optimization Using AMD GPU Partitioning

Demonstrates how to leverage compute and memory partitioning features in ROCm to scale model serving.

ROCm 7.2: Smarter, Faster, and More Scalable for Modern AI Workloads

We highlight the latest ROCm 7.2 enhancements for AMD Instinct GPUs, designed to boost AI and HPC performance.

ROCm Becomes a First-Class Platform in the vLLM Ecosystem

ROCm is now a first-class vLLM platform: official wheels + Docker, stronger CI, and faster LLM & multimodal inference on AMD Instinct GPUs.

Stay informed

- Subscribe to our RSS feed (requires an RSS reader, available as a browser plugin)

- Sign up for the ROCm newsletter

- View our blog statistics