Applications & models - Page 4

Explore the latest blogs about applications and models in the ROCm ecosystem, including machine learning frameworks, AI models, and application case studies.

Inference with HunyuanWorld-Voyager on AMD Instinct GPUs

Learn how to run inference with HunyuanWorld-Voyager, a state-of-the-art 3D world model, on AMD Instinct MI300X GPUs using ROCm for efficient video generation and prediction.

Accelerating AI-Driven Crystalline Materials Design with MatterGen on AMD Instinct MI300X

Learn how to run a generative model for inorganic material design with MatterGen on MI300X.

AMD Enterprise AI Suite: Open Infrastructure for Production AI

Explore an open, GPU-optimized platform to build, deploy, and scale enterprise AI workloads on AMD Instinct with production-ready performance.

AMD Inference Microservice (AIM): Production Ready Inference on AMD Instinct™ GPUs

Learn how AIM delivers efficient, scalable inference on AMD Instinct GPUs and see how it simplifies deployment, optimization, and operations.

Plug-and-Play CuPy on ROCm: Data Analytics Acceleration Made Simple

Learn how to enhance your analytics projects with the latest AMD CuPy release.

Reproducing AMD MLPerf Training v5.1 Submission Result

Learn how to reproduce AMD's MLPerf Training v5.1 submission result.

Technical Dive into AMD MLPerf Training v5.1 Submission

Learn about the technical details of how AMD achieved the results in the MLPerf Training v5.1 submission.

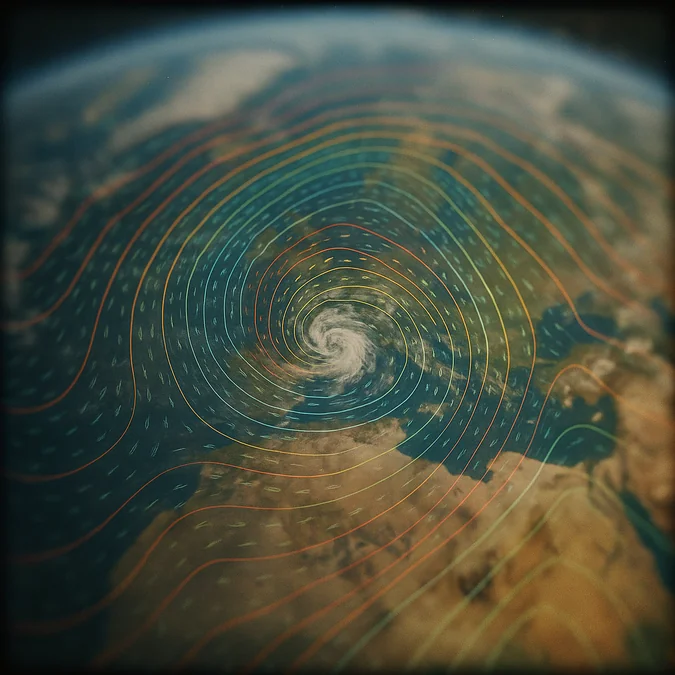

Training AI Weather Forecasting Models on AMD Instinct

Learn how deterministic and generative AI models for synoptic-scale weather forecasting are trained efficiently on AMD Instinct MI300X GPUs using the ROCm and GeoArches tools.

Day 0 Developer Guide: hipBLASLt Offline GEMM Tuning Script

Learn how to improve model performance with hipBLASLt offline tuning using our easy-to-use Day 0 tool, which helps developers optimize GEMM efficiency.

Retrieval Augmented Generation (RAG) with vLLM, LangChain and Chroma

Learn how AI-powered knowledge retrieval enriches prompts with proprietary data to deliver accurate, context-aware answers.

Nitro-E: A 304M Diffusion Transformer Model for High Quality Image Generation

Nitro-E is an extremely lightweight diffusion transformer model for high-quality image generation with only 304M parameters.

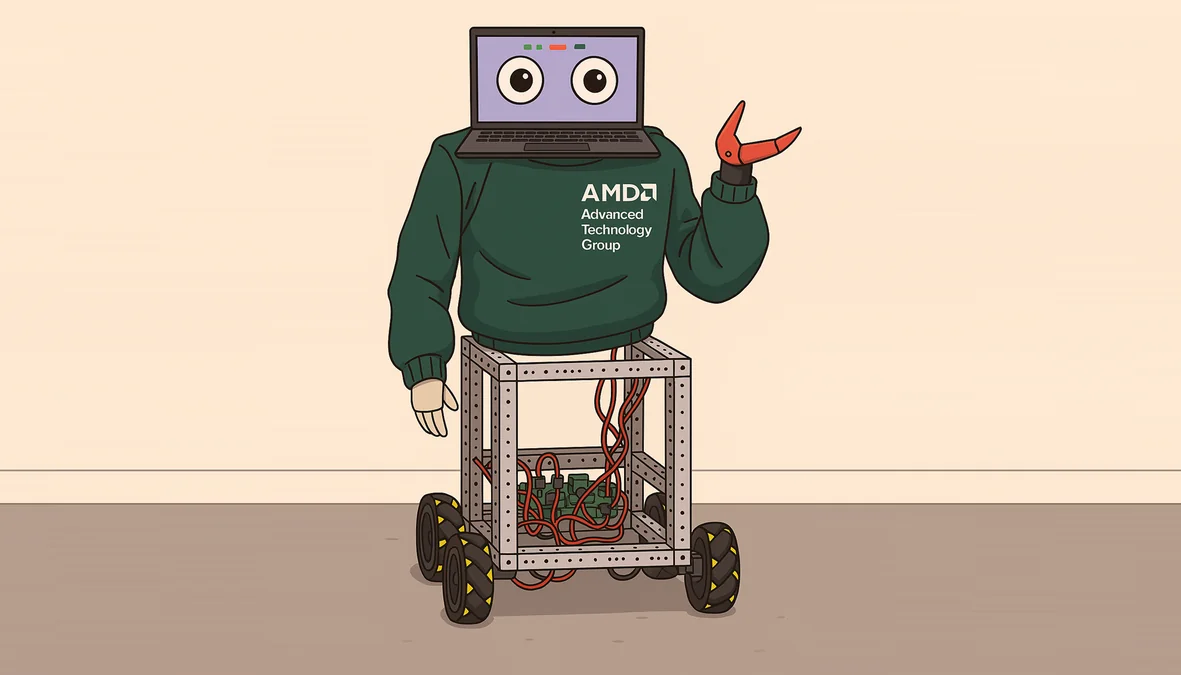

STX-B0T: Real-time AI Robot Assistant Powered by RyzenAI and ROCm

STX-B0T explores the potential of RyzenAI PCs to power robotics applications on NPU+GPU. This blog demonstrates how our hardware and software interoperate to unlock real-time perception.