Xuanwu Yin

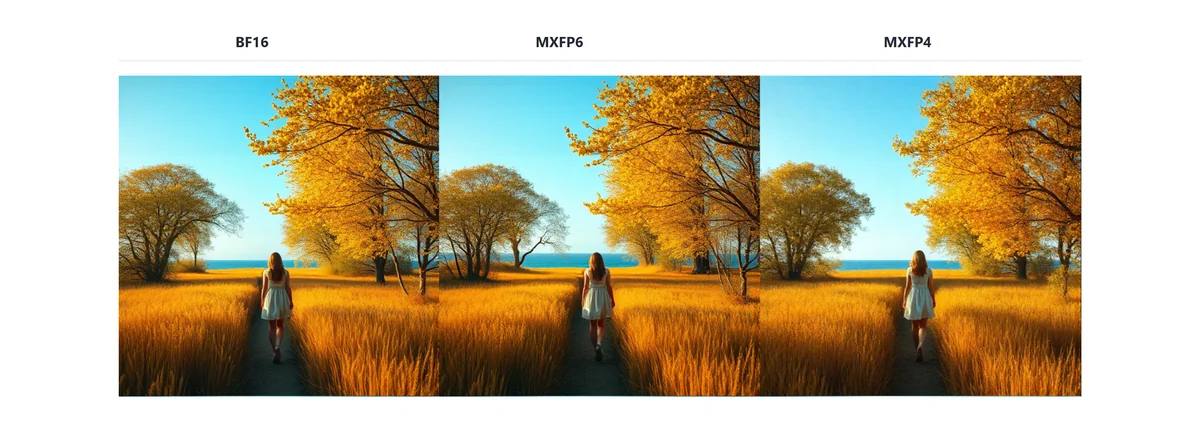

Xuanwu Yin leads the model optimization team, driving work on model quantization, sparsity, speculative decoding, and efficient training/inference across multiple platforms. His team delivers high-performance, production-ready solutions for large language models, vision-language models, and image/video-generation pipelines, while providing direct support to customers.

Posts by Xuanwu Yin