AI - Applications & Models - Page 4

Plug-and-Play CuPy on ROCm: Data Analytics Acceleration Made Simple

Learn how to enhance your analytics projects with the latest AMD CuPy release.

Reproducing AMD MLPerf Training v5.1 Submission Result

Learn how to reproduce AMD's MLPerf Training v5.1 submission result.

Technical Dive into AMD MLPerf Training v5.1 Submission

Learn about the technical details of how AMD achieved the results in the MLPerf Training v5.1 submission.

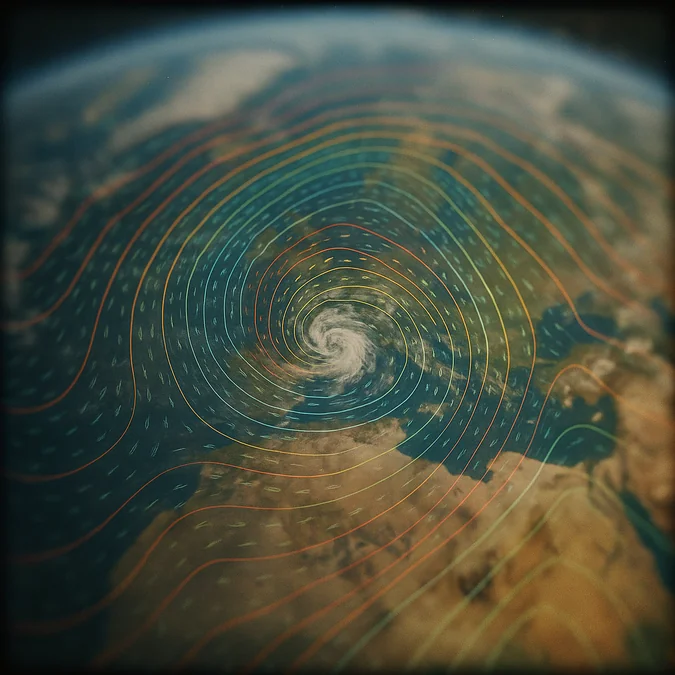

Training AI Weather Forecasting Models on AMD Instinct

Learn how deterministic and generative AI models for synoptic-scale weather forecasting are trained efficiently on AMD Instinct MI300X GPUs using the ROCm and GeoArches tools.

Day 0 Developer Guide: hipBLASLt Offline GEMM Tuning Script

Learn how to improve model performance with hipBLASLt offline tuning, using our easy-to-use Day 0 tool that helps developers optimize GEMM efficiency.

Nitro-E: A 304M Diffusion Transformer Model for High Quality Image Generation

Nitro-E is an extremely lightweight diffusion transformer model for high-quality image generation with only 304M parameters.

STX-B0T: Real-time AI Robot Assistant Powered by RyzenAI and ROCm

STX-B0T explores the potential of RyzenAI PCs to power robotics applications on NPU+GPU. This blog demonstrates how our hardware and software interoperate to unlock real-time perception.

Empowering Developers to Build a Robust PyTorch Ecosystem on AMD ROCm™ with Better Insights and Monitoring

Production ROCm support for N-1 to N+1 PyTorch releases is in progress. The AI Software Head-Up Dashboard shows the status of PyTorch on ROCm.

Kimi-K2-Instruct: Enhanced Out-of-the-Box Performance on AMD Instinct MI355 Series GPUs

Learn how AMD Instinct MI355 Series GPUs deliver competitive Kimi-K2 inference with faster TTFT, lower latency, and strong throughput.

Announcing MONAI 1.0.0 for AMD ROCm: Breakthrough AI Acceleration for Medical Imaging Models on AMD Instinct™ GPUs

Learn how to use Medical Open Network for Artificial Intelligence (MONAI) 1.0 on ROCm, with examples and demonstrations.

Medical Imaging on MI300X: Optimized SwinUNETR for Tumor Detection

Learn how to set up, run, and optimize SwinUNETR on AMD MI300X GPUs for fast 3D segmentation of tumors in medical imaging using large ROIs.

Optimizing FP4 Mixed-Precision Inference with Petit on AMD Instinct MI250 and MI300 GPUs: A Developer’s Perspective

Learn how FP4 mixed-precision on AMD GPUs boosts inference speed and integrates seamlessly with SGLang.