Software tools & optimizations - Page 4

Discover the latest blogs about ROCm software tools, libraries, and performance optimizations to help you get the most out of your AMD hardware.

Primus: A Lightweight, Unified Training Framework for Large Models on AMD GPUs

Primus streamlines LLM training on AMD GPUs with unified configs, multi-backend support, preflight validation, and structured logging.

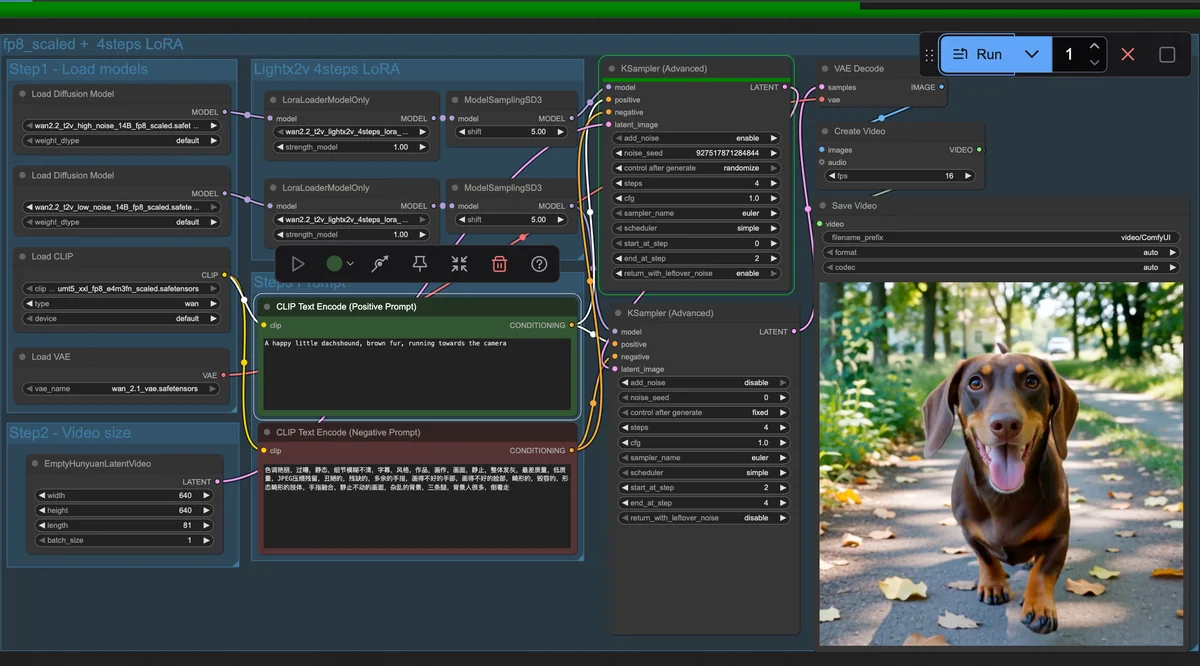

Running ComfyUI on AMD Instinct

This blog explains what ComfyUI is and how it works, then shows how to deploy it on AMD Instinct GPUs.

Performance Profiling on AMD GPUs – Part 2: Basic Usage

Part 2 of our GPU profiling series guides beginners through practical steps to identify and optimize kernel bottlenecks using ROCm tools.

Running ComfyUI in Windows with ROCm on WSL

Run ComfyUI on Windows with ROCm and WSL to harness Radeon GPU power for local AI tasks like Stable Diffusion, with no dual-boot needed.

GEAK: Introducing Triton Kernel AI Agent & Evaluation Benchmarks

AMD introduces GEAK, an AI agent for generating optimized Triton GPU kernels, achieving up to 63% accuracy and up to 2.59× speedups on MI300X GPUs.

Avoiding LDS Bank Conflicts on AMD GPUs Using CK-Tile Framework

This blog shows how CK-Tile’s XOR-based swizzle optimizes shared memory access in GEMM kernels on AMD GPUs by eliminating LDS bank conflicts.

Chain-of-Thought Guided Visual Reasoning Using Llama 3.2 on a Single AMD Instinct MI300X GPU

Fine-tune Llama 3.2 Vision models on an AMD MI300X GPU using Torchtune, achieving 2.3× better accuracy with the 11B model vs. the 90B model on chart-based tasks.

Introducing ROCm-LS: Accelerating Life Science Workloads with AMD Instinct™ GPUs

Accelerate life science and medical workloads with ROCm-LS, AMD's GPU-optimized toolkit for faster multidimensional image processing and vision.

Announcing hipCIM: A Cutting-Edge Solution for Accelerated Multidimensional Image Processing

Fully utilize the power of AMD Instinct GPUs to process and interpret detailed multidimensional images with lightning speed.

vLLM V1 Meets AMD Instinct GPUs: A New Era for LLM Inference Performance

vLLM v1 on AMD ROCm boosts LLM serving with faster TTFT, higher throughput, and optimized multimodal support—ready out of the box.

Accelerated LLM Inference on AMD Instinct™ GPUs with vLLM 0.9.x and ROCm

vLLM v0.9.x is here with major ROCm™ optimizations—boosting LLM performance, reducing latency, and expanding model support on AMD Instinct™ GPUs.

Performance Profiling on AMD GPUs – Part 1: Foundations

Part 1 of our GPU profiling series introduces ROCm tools, setup steps, and key concepts to prepare you for deeper dives in the posts to follow.