Applications & models

Explore the latest blogs about applications and models in the ROCm ecosystem, including machine learning frameworks, AI models, and application case studies.

PyTorch Offline Tuning with TunableOp

Learn how to accelerate PyTorch workloads with TunableOp offline tuning: record, tune separately, and deploy faster inference.

LuminaSFT: Generating Synthetic Fine-Tuning Data for Small Language Models

Learn how task-specific synthetic data can improve small language model performance and explore results from the LuminaSFT study.

Unlocking Sparse Acceleration on AMD GPUs with hipSPARSELt

This blog post introduces the semi-structured sparsity support available on AMD systems and explains how to use the hipSPARSELt library to take advantage of it.

Reinforcement Learning from Human Feedback on AMD GPUs with verl and ROCm 7.0.0

Deploy verl on AMD GPUs for fast, scalable RLHF training with ROCm optimization, Docker scripts, and strong throughput and convergence results.

Solution Blueprints: Accelerating AI Deployment with AMD Enterprise AI

This blog presents AIMs Solution Blueprints and demonstrates modular, Helm-based deployment patterns.

Digital Twins on AMD: Building Robotic Simulations Using Edge AI PCs

Explore how Ryzen AI MAX enables robotic simulation on a single AI PC and take your first step into digital twins.

Resilient Large-Scale Training: Integrating TorchFT with TorchTitan on AMD GPUs

Achieve resilient, checkpoint-less distributed training on AMD GPUs by integrating TorchFT with TorchTitan on Primus-SaFE.

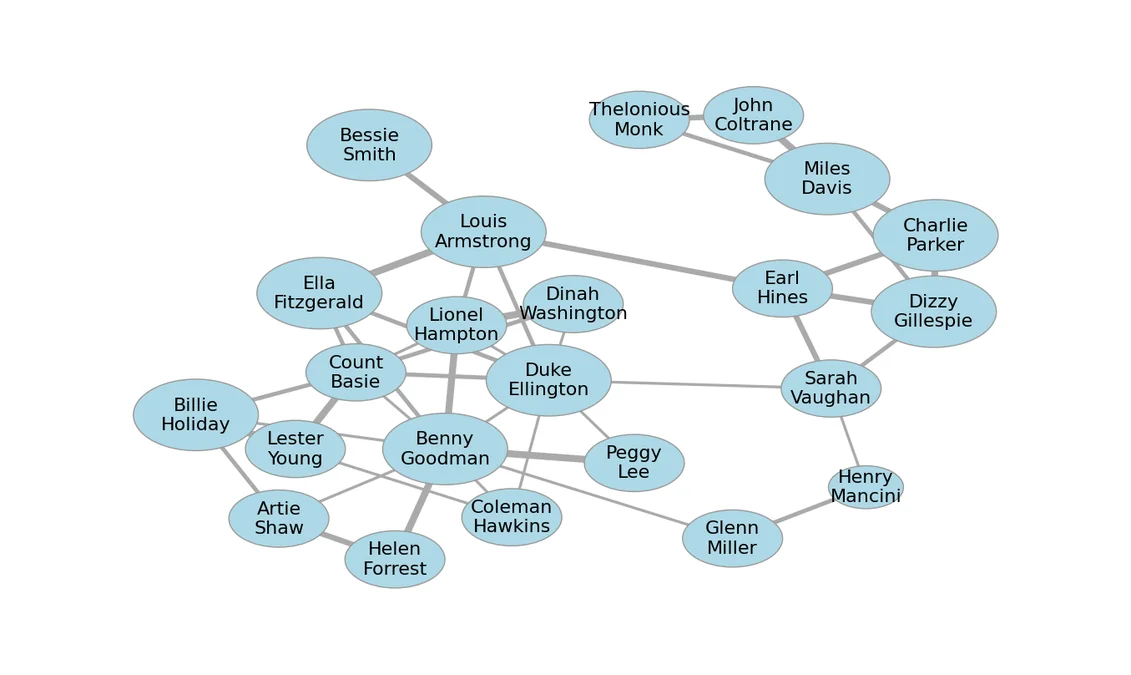

Accelerating Graph Layout with AI and ROCm on AMD GPUs

A case study of using AI coding agents to optimize graph layout on GPUs.

Micro-World: First AMD Open-Source World Models for Interactive Video Generation

Micro-World is an action-controlled interactive world model designed to generate high-quality, open-domain scenes.

Foundations of Molecular Generation with GP-MoLFormer on AMD Instinct MI300X Accelerators

Explore molecular generation with GP-MoLFormer on AMD MI300X GPUs, including sequence-based modeling, inference, and property-guided design.

Nitro-AR: A Compact AR Transformer for High-Quality Image Generation

Nitro-AR is a compact E-MMDiT-based masked AR image generator matching diffusion quality with lower latency on AMD GPUs.

Applying Compute Partitioning for Workloads on MI300X GPUs

Learn how to boost MI300X performance using GPU compute partitioning for parallel workloads like GROMACS and REINVENT.