Applications & models - Page 2

Explore the latest blogs about applications and models in the ROCm ecosystem, including machine learning frameworks, AI models, and application case studies.

Installing AMD HIP-Enabled GROMACS on HPC Systems: A LUMI Supercomputer Case Study

Athena-PRM: Enhancing Multimodal Reasoning with Data-Efficient Process Reward Models

Learn how to use a data-efficient Process Reward Model to enhance the reasoning ability of large language and multimodal models.

Bridging the Last Mile: Deploying Hummingbird-XT for Efficient Video Generation on AMD Consumer-Grade Platforms

Learn how to use the Hummingbird-XT and Hummingbird-XTX models to generate videos. Explore the video diffusion model acceleration solution, including the DiT distillation method and the light VAE model.

Using Gradient Boosting Libraries on MI300X for Financial Risk Prediction

This blog shows how to run GPU-accelerated LightGBM and ThunderGBM training on AMD Instinct MI300X GPUs with ROCm for finance-focused workloads.

High-Resolution Weather Forecasting with StormCast on AMD Instinct GPU Accelerators

A showcase of how to run high-resolution weather prediction models such as StormCast on AMD Instinct hardware.

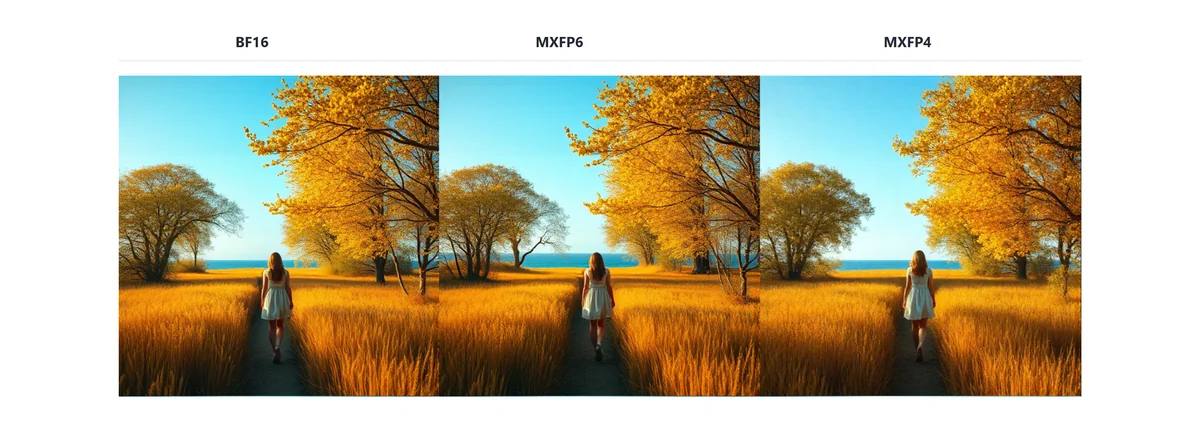

Breaking the Accuracy-Speed Barrier: How MXFP4/6 Quantization Revolutionizes Image and Video Generation

Explore how MXFP4/6, supported by AMD Instinct™ MI350 series GPUs, achieves BF16-comparable image and video generation quality.

ROCm Fork of MaxText: Structure and Strategy

Learn how the ROCm fork of MaxText mirrors upstream while enabling offline testing, minimal datasets, and platform-agnostic, decoupled workflows.

ROCm MaxText Testing — Decoupled (Offline) and Cloud-Integrated Modes

Learn how to run MaxText unit tests on AMD ROCm GPUs in offline and cloud modes for fast validation, clear reports, and reproducible workflows.

SparK: Query-Aware Unstructured Sparsity with Recoverable KV Cache Channel Pruning

This blog discusses SparK, a training-free, plug-and-play method for KV cache compression in large language models (LLMs).

GEAK-Triton v2 Family of AI Agents: Kernel Optimization for AMD Instinct GPUs

Introducing the GEAK family: AI-driven agents that automate GPU kernel optimization for AMD Instinct GPUs with hardware-aware feedback.

A Step-by-Step Walkthrough of Decentralized LLM Training on AMD GPUs

Learn how to train LLMs across decentralized clusters on AMD Instinct MI300 GPUs with DiLoCo and Prime, scaling beyond a single datacenter.

Medical Imaging on MI300X: SwinUNETR Inference Optimization

A practical guide to optimizing SwinUNETR inference on AMD Instinct™ MI300X GPUs for fast 3D segmentation of tumors in medical imaging.