AI - Applications & Models

PyTorch Offline Tuning with TunableOp

Learn how to accelerate PyTorch workloads with TunableOp offline tuning—record, tune separately, and deploy faster inference.

LuminaSFT: Generating Synthetic Fine-Tuning Data for Small Language Models

Learn how task-specific synthetic data can improve small language model performance and explore results from the LuminaSFT study.

Unlocking Sparse Acceleration on AMD GPUs with hipSPARSELt

This blog post introduces semi-structured sparsity support on AMD systems and explains how to use the hipSPARSELt library to benefit from it.

Solution Blueprints: Accelerating AI Deployment with AMD Enterprise AI

This blog presents AIMs Solution Blueprints and demonstrates modular, Helm‑based deployment patterns.

Digital Twins on AMD: Building Robotic Simulations Using Edge AI PCs

Explore how Ryzen AI MAX enables robotic simulation on a single AI PC and take your first step into digital twins.

Resilient Large-Scale Training: Integrating TorchFT with TorchTitan on AMD GPUs

Achieve resilient, checkpoint-less distributed training on AMD GPUs by integrating TorchFT with TorchTitan on Primus-SaFE.

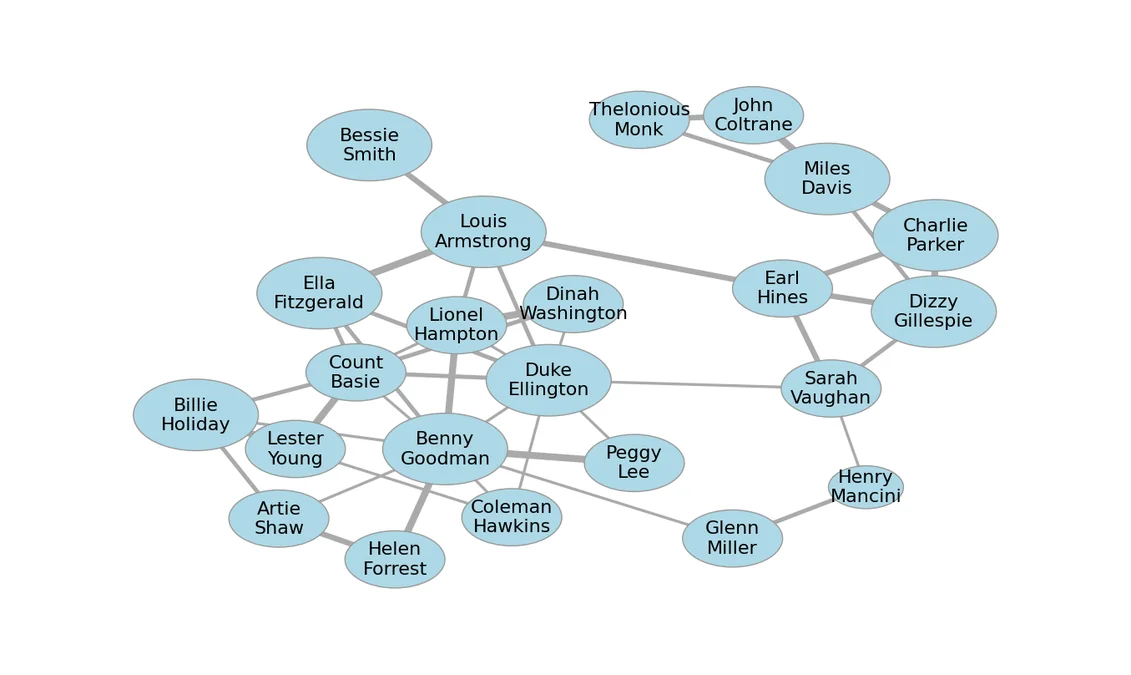

Accelerating Graph Layout with AI and ROCm on AMD GPUs

A case study of using AI coding agents to optimize graph layout on GPUs.

Micro-World: First AMD Open-Source World Models for Interactive Video Generation

Micro-World is an action-controlled interactive world model designed to generate high-quality, open-domain scenes.

Foundations of Molecular Generation with GP-MoLFormer on AMD Instinct MI300X Accelerators

Explore molecular generation with GP-MoLFormer on AMD MI300X GPUs, including sequence-based modeling, inference, and property-guided design.

Nitro-AR: A Compact AR Transformer for High-Quality Image Generation

Nitro-AR is a compact E-MMDiT-based masked AR image generator matching diffusion quality with lower latency on AMD GPUs.

Athena-PRM: Enhancing Multimodal Reasoning with Data-Efficient Process Reward Models

Learn how to use a data-efficient Process Reward Model to enhance the reasoning ability of large language and multimodal models.

Bridging the Last Mile: Deploying Hummingbird-XT for Efficient Video Generation on AMD Consumer-Grade Platforms

Learn how to use the Hummingbird-XT and Hummingbird-XTX models to generate videos. Explore the video diffusion model acceleration solution, including the DiT distillation method and a light VAE model.