AI Blogs - Page 3

Athena-PRM: Enhancing Multimodal Reasoning with Data-Efficient Process Reward Models

Learn how to use a data-efficient Process Reward Model to enhance the reasoning ability of large language and multimodal models.

Bridging the Last Mile: Deploying Hummingbird-XT for Efficient Video Generation on AMD Consumer-Grade Platforms

Learn how to use the Hummingbird-XT and Hummingbird-XTX models to generate videos. Explore the video diffusion model acceleration solution, including the DiT distillation method and a light VAE model.

Introducing the AMD Network Operator v1.0.0: Simplifying High-Performance Networking for AMD Platforms

Introducing the AMD Network Operator for automating high-performance AI NIC networking in Kubernetes for AI and HPC workloads.

High-Resolution Weather Forecasting with StormCast on AMD Instinct GPU Accelerators

A showcase of how to run high-resolution weather prediction models such as StormCast on AMD Instinct hardware.

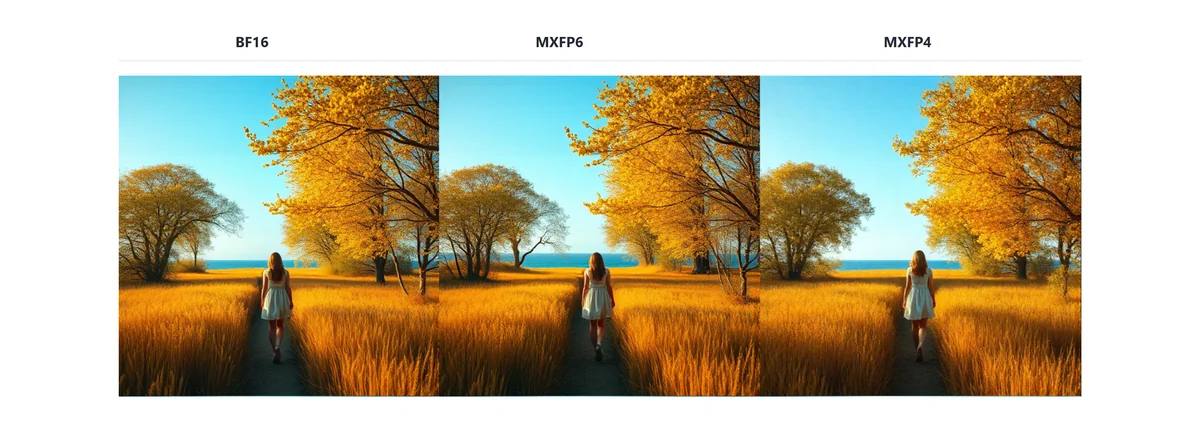

Breaking the Accuracy-Speed Barrier: How MXFP4/6 Quantization Revolutionizes Image and Video Generation

Explore how MXFP4/6, supported by AMD Instinct™ MI350 series GPUs, achieves BF16-comparable image and video generation quality.

ROCm Fork of MaxText: Structure and Strategy

Learn how the ROCm fork of MaxText mirrors upstream while enabling offline testing, minimal datasets, and platform-agnostic, decoupled workflows.

ROCm MaxText Testing — Decoupled (Offline) and Cloud-Integrated Modes

Learn how to run MaxText unit tests on AMD ROCm GPUs in offline and cloud modes for fast validation, clear reports, and reproducible workflows.

SparK: Query-Aware Unstructured Sparsity with Recoverable KV Cache Channel Pruning

This blog discusses SparK, a training-free, plug-and-play method for KV cache compression in large language models (LLMs).

Accelerating Multimodal Inference in vLLM: The One-Line Optimization for Large Multimodal Models

Learn how to optimize multimodal model inference with batch-level data parallelism for vision encoders in vLLM, achieving up to 45% throughput gains on AMD MI300X.

GEAK-Triton v2 Family of AI Agents: Kernel Optimization for AMD Instinct GPUs

Introducing the GEAK family: AI-driven agents that automate GPU kernel optimization for AMD Instinct GPUs with hardware-aware feedback.

Getting Started with AMD AI Workbench: Deploying and Managing AI Workloads

Learn how to deploy and manage AI workloads with AMD AI Workbench, a low-code interface for developers to manage AI inference deployments.

A Step-by-Step Walkthrough of Decentralized LLM Training on AMD GPUs

Learn how to train LLMs across decentralized clusters on AMD Instinct MI300 GPUs with DiLoCo and Prime, scaling beyond a single datacenter.