Developers Blogs

LuminaSFT: Generating Synthetic Fine-Tuning Data for Small Language Models

Learn how task-specific synthetic data can improve small language model performance and explore results from the LuminaSFT study.

Primus-Pipeline: A More Flexible and Scalable Pipeline Parallelism Implementation

Learn how to use our flexible and scalable pipeline parallelism framework with the Primus backend on AMD hardware.

FlyDSL: Expert GPU Kernel Development with the Ease of MLIR Python Native DSL on AMD GPUs

FlyDSL is a Python-first, MLIR-native DSL for expert GPU kernel development and tuning on AMD GPUs.

Introducing hipThreads: A C++-Style Concurrency Library for AMD GPUs

Discover how hipThreads lets you write hip::thread just like std::thread and unlock GPU acceleration with minimal code changes.

Unlocking Sparse Acceleration on AMD GPUs with hipSPARSELt

This blog post introduces the semi-structured sparsity technology supported on AMD systems and explains how to use the hipSPARSELt library to take advantage of it.

Digital Twins on AMD: Building Robotic Simulations Using Edge AI PCs

Explore how Ryzen AI MAX enables robotic simulation on a single AI PC and take your first step into digital twins.

Building Robotics Applications with Ryzen AI and ROS 2

This blog post walks through deploying a robotics application on an AI PC integrated with ROS (Robot Operating System). We showcase the Ryzen AI CVML Library for perception tasks such as depth estimation and develop a custom ROS 2 node that integrates easily with the ROS ecosystem and its standard components.

Resilient Large-Scale Training: Integrating TorchFT with TorchTitan on AMD GPUs

Achieve resilient, checkpoint-less distributed training on AMD GPUs by integrating TorchFT with TorchTitan on Primus-SaFE.

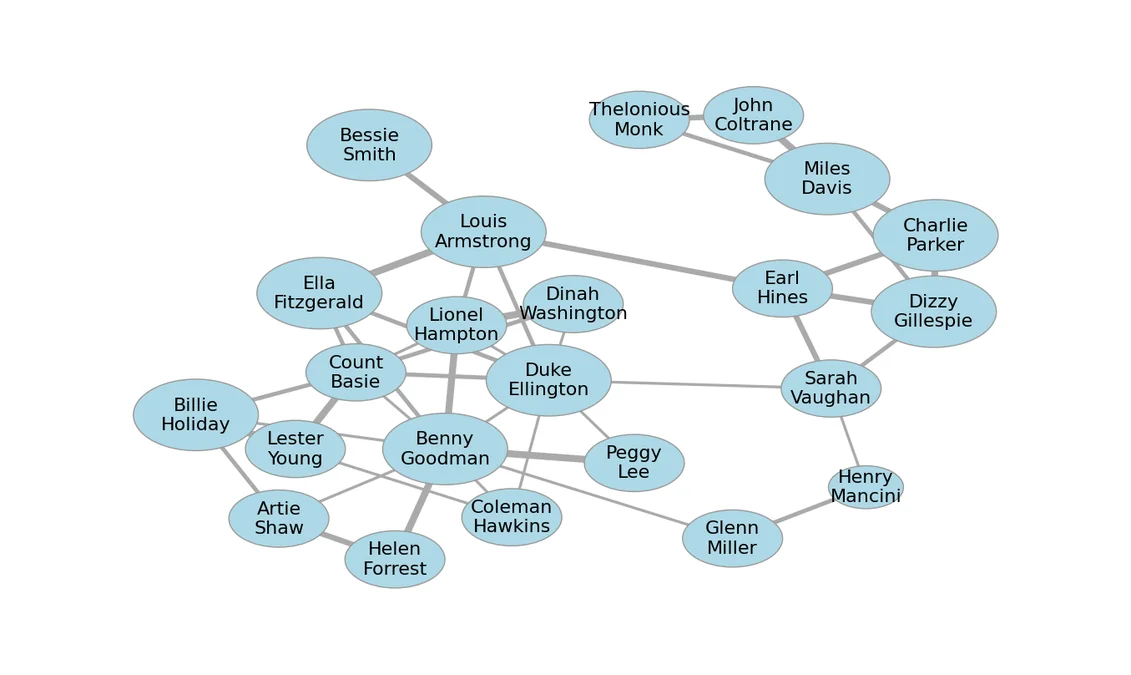

Accelerating Graph Layout with AI and ROCm on AMD GPUs

A case study in using AI coding agents to optimize graph layout on GPUs.

Debugging NaN Results in CK Tile GEMM: A rocgdb Detective Story

Learn GPU kernel debugging with rocgdb through a real case: tracing NaN outputs to a one-character typo in CK Tile GEMM.

ROCm 7.2: Smarter, Faster, and More Scalable for Modern AI Workloads

We highlight the latest ROCm 7.2 enhancements for AMD Instinct GPUs, designed to boost AI and HPC performance.

ROCm Becomes a First-Class Platform in the vLLM Ecosystem

ROCm is now a first-class vLLM platform: official wheels and Docker images, stronger CI, and faster LLM and multimodal inference on AMD Instinct GPUs.