Recent Posts - Page 2

Elevate Your LLM Inference: Autoscaling with Ray, ROCm 7.0.0, and SkyPilot

Learn how to use multi-node and multi-cluster autoscaling in the Ray framework on ROCm 7.0.0 with SkyPilot.

Reinforcement Learning from Human Feedback on AMD GPUs with verl and ROCm 7.0.0

Deploy verl on AMD GPUs for fast, scalable RLHF training with ROCm optimization, Docker scripts, and strong throughput and convergence results.

Solution Blueprints: Accelerating AI Deployment with AMD Enterprise AI

This blog presents AIMs Solution Blueprints and demonstrates modular, Helm‑based deployment patterns.

Digital Twins on AMD: Building Robotic Simulations Using Edge AI PCs

Explore how Ryzen AI MAX enables robotic simulation on a single AI PC and take your first step into digital twins.

Building Robotics Applications with Ryzen AI and ROS 2

This blog post walks through deploying a robotics application on an AI PC integrated with ROS, the Robot Operating System. We use the Ryzen AI CVML Library for perception tasks such as depth estimation and develop a custom ROS 2 node that integrates easily with the ROS ecosystem and standard components.

Resilient Large-Scale Training: Integrating TorchFT with TorchTitan on AMD GPUs

Achieve resilient, checkpoint-less distributed training on AMD GPUs by integrating TorchFT with TorchTitan on Primus-SaFE.

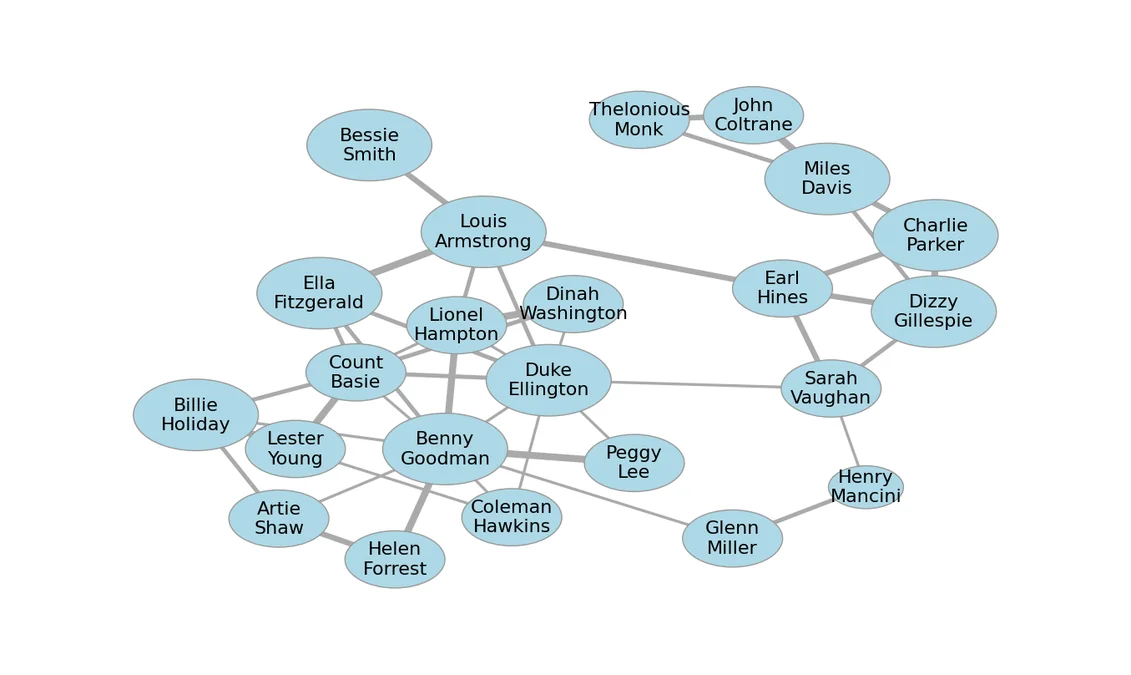

Accelerating Graph Layout with AI and ROCm on AMD GPUs

A case study in using AI coding agents to optimize graph layout on GPUs.

Micro-World: First AMD Open-Source World Models for Interactive Video Generation

Micro-World is an action-controlled interactive world model designed to generate high-quality, open-domain scenes.

Foundations of Molecular Generation with GP-MoLFormer on AMD Instinct MI300X Accelerators

Explore molecular generation with GP-MoLFormer on AMD MI300X GPUs, including sequence-based modeling, inference, and property-guided design.

Debugging NaN Results in CK Tile GEMM: A rocgdb Detective Story

Learn GPU kernel debugging with rocgdb through a real case: tracing NaN outputs to a one-character typo in CK Tile GEMM.

Nitro-AR: A Compact AR Transformer for High-Quality Image Generation

Nitro-AR is a compact E-MMDiT-based masked AR image generator matching diffusion quality with lower latency on AMD GPUs.

LLM Inference Optimization Using AMD GPU Partitioning

Learn how to use the compute and memory partitioning features in ROCm to scale model serving.