Recent Posts - Page 6

Retrieval Augmented Generation (RAG) with vLLM, LangChain and Chroma

Learn about AI-powered knowledge retrieval that enriches prompts with proprietary data to deliver accurate, context-aware answers.

Stability at Scale: AMD’s Full‑Stack Platform for Large‑Model Training

Primus streamlines LLM training on AMD GPUs with unified configs, multi-backend support, preflight validation, and structured logging.

High-Accuracy MXFP4, MXFP6, and Mixed-Precision Models on AMD GPUs

Learn how to use AMD Quark for efficient MXFP4/MXFP6 quantization on AMD Instinct accelerators while retaining high accuracy.

Nitro-E: A 304M Diffusion Transformer Model for High Quality Image Generation

Nitro-E is an extremely lightweight diffusion transformer model for high-quality image generation, with only 304M parameters.

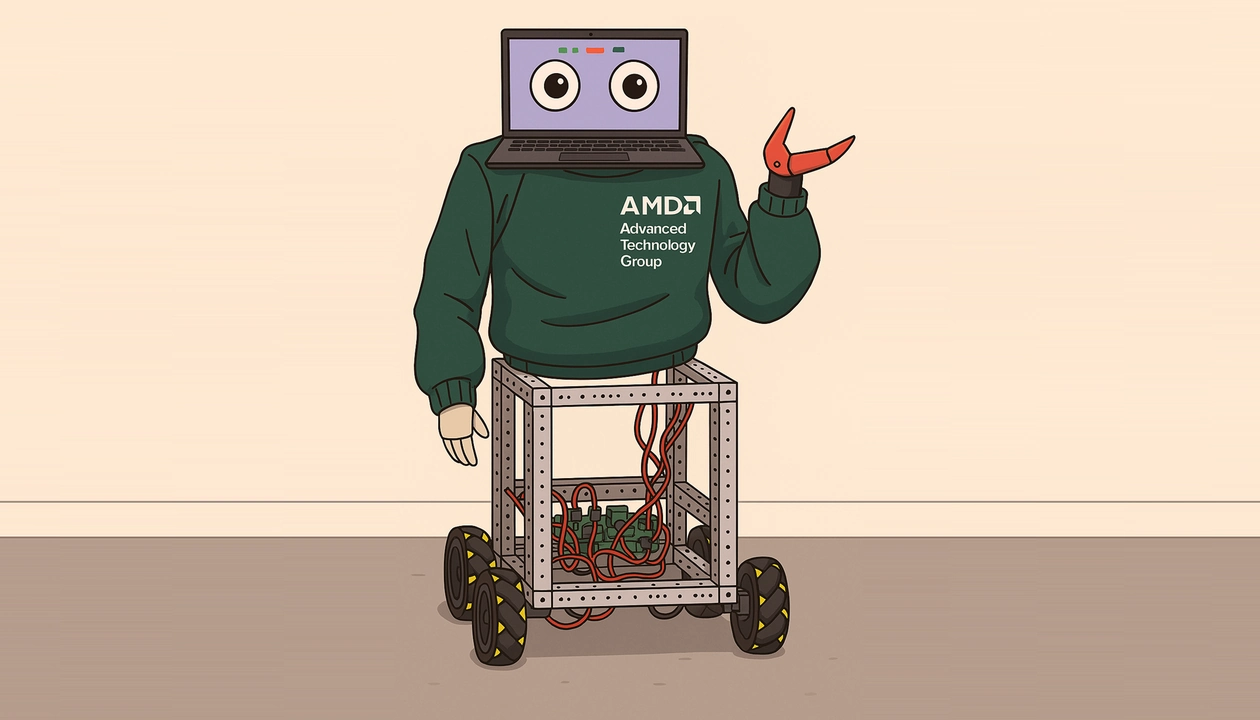

STX-B0T: Real-time AI Robot Assistant Powered by RyzenAI and ROCm

STX-B0T explores the potential of RyzenAI PCs to power robotics applications on the NPU and GPU. This blog post demonstrates how our hardware and software work together to unlock real-time perception.

Performance Profiling on AMD GPUs - Part 3: Advanced Usage

Part 3 of our GPU profiling series guides beginners through practical steps to identify and optimize kernel bottlenecks using ROCm tools.

Empowering Developers to Build a Robust PyTorch Ecosystem on AMD ROCm™ with Better Insights and Monitoring

Production ROCm support for N-1 to N+1 PyTorch releases is in progress. The AI Software Head-Up Dashboard shows the status of PyTorch on ROCm.

ROCm 7.9 Technology Preview: ROCm Core SDK and TheRock Build System

Meet the ROCm Core SDK and learn how to easily install and build ROCm components using TheRock.

Kimi-K2-Instruct: Enhanced Out-of-the-Box Performance on AMD Instinct MI355 Series GPUs

Learn how AMD Instinct MI355 Series GPUs deliver competitive Kimi-K2 inference with faster time to first token (TTFT), lower latency, and strong throughput.

Gumiho: A New Paradigm for Speculative Decoding — Earlier Tokens in a Draft Sequence Matter More

Gumiho speeds up LLM inference by prioritizing the accuracy of early tokens in the draft sequence, blending serial and parallel decoding for speed, accuracy, and ROCm-optimized deployment.

GEMM Tuning within hipBLASLt – Part 2

Learn how to use hipblaslt-bench for offline GEMM tuning in hipBLASLt—benchmark, save, and apply custom-tuned kernels at runtime.

Announcing MONAI 1.0.0 for AMD ROCm: Breakthrough AI Acceleration for Medical Imaging Models on AMD Instinct™ GPUs

Learn how to use the Medical Open Network for Artificial Intelligence (MONAI) 1.0 on ROCm, with examples and demonstrations.